What Are the 4 Risk Levels in the EU’s AI Act – and What Are the Obligations?

- April 18, 2024

The EU’s AI Act distinguishes between four risk levels for AI systems. The risk level determines which obligations apply to the development or use of an AI system.

The 4 risk levels in AI systems are:

- Prohibited AI systems

- High-risk AI systems

- Limited risk AI systems

- Minimal risk AI systems

And we’ll tell you everything you need to know about them – and their obligations – in this blog post.

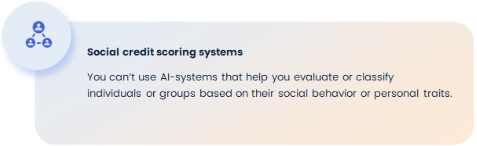

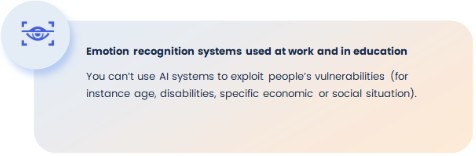

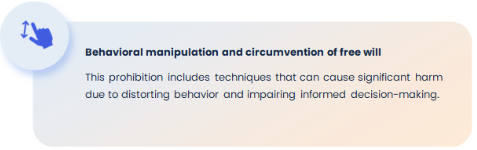

What are prohibited AI systems?

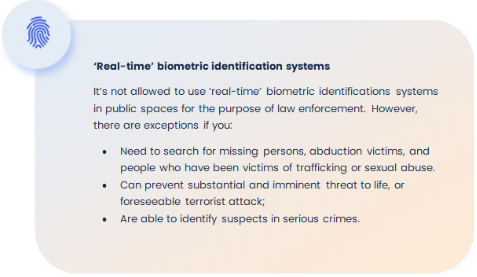

The AI Act identifies several AI systems that are illegal to use because they are seen as threats to people. In the category of prohibited AI systems, we find 8 types of systems:

What are high-risk AI systems?

Now that the draft of the final text of the AI Act is available, we know that there are some nuances to when a system counts as high-risk.

High-risk AI systems are systems that pose a significant risk of harm to health, safety, or fundamental rights.

Systems that only perform limited tasks, or that simply improve an action already performed by a human, are therefore not included here.

They are divided into two categories depending on whether the AI systems are:

- Used in products covered by the EU’s product safety legislation listed in Annex II (including toys, aviation, cars, medical devices, and lifts) and required to undergo a third-party conformity assessment under that legislation

- Used in one of the following 8 areas, in which case they must be registered in an EU database:

  - Biometrics (used for identification and categorization of people)

  - Critical infrastructure

  - Educational and vocational training

  - Employment, worker management, and access to self-employment

  - Access to and enjoyment of essential private services and public services and benefits

  - Law enforcement

  - Migration, asylum, and border control management

  - Assistance in legal interpretation and application of the law.

In addition, an AI system that falls into one of these 8 areas is always high-risk if it profiles individuals.
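The classification rules above can be read as a small decision procedure. The following is a minimal sketch of that reading, not a legal test: the class `AISystem`, its field names, the area keys, and the function `is_high_risk` are all hypothetical names introduced for illustration, and a real assessment depends on the wording of the Act itself.

```python
from dataclasses import dataclass
from typing import Optional

# Short hypothetical keys for the 8 areas listed above.
HIGH_RISK_AREAS = {
    "biometrics",
    "critical_infrastructure",
    "education_and_vocational_training",
    "employment",
    "essential_services",
    "law_enforcement",
    "migration_asylum_border_control",
    "legal_interpretation",
}


@dataclass
class AISystem:
    # All fields are illustrative assumptions, not terms defined by the AI Act.
    name: str
    area: Optional[str] = None                  # one of HIGH_RISK_AREAS, if any
    in_regulated_product: bool = False          # covered by EU product safety law
    needs_third_party_assessment: bool = False  # under that product safety law
    profiles_individuals: bool = False
    only_limited_or_assistive: bool = False     # limited task or human-led action


def is_high_risk(system: AISystem) -> bool:
    """Apply the two categories and the profiling rule described above."""
    # Category 1: part of a regulated product that needs third-party assessment.
    if system.in_regulated_product and system.needs_third_party_assessment:
        return True
    # Category 2: used in one of the 8 listed areas.
    if system.area in HIGH_RISK_AREAS:
        # Profiling individuals always makes the system high-risk.
        if system.profiles_individuals:
            return True
        # Otherwise, limited or purely assistive systems are not included.
        return not system.only_limited_or_assistive
    return False
```

For example, `is_high_risk(AISystem(name="CV screener", area="employment", profiles_individuals=True))` returns `True`, while a system outside both categories returns `False`.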

Key obligations for providers of high-risk AI systems

Most of the obligations in the AI Act apply to providers of high-risk AI systems. These obligations include:

- Implementing a risk management system throughout the AI system’s lifecycle

- Conducting data governance (ensuring that data sets are, for instance, relevant, representative, free of errors, and complete)

- Registering in the public EU database for high-risk AI systems (this only applies to AI systems used within the 8 areas in the second category; see the section “What are high-risk AI systems?” above)

- Creating technical documentation to ensure transparency (for instance, instructions for use and clear information for ‘natural’ persons)

- Designing logging capabilities and human oversight mechanisms into the systems (for instance, systems that are easy to explain and interpret, auditable logs, and human-in-the-loop controls)

- Ensuring appropriate levels of accuracy, robustness, and cybersecurity

- Conducting fundamental rights assessments

- Implementing a quality management system.

Key obligations for deployers of high-risk AI systems

Deployers of high-risk AI systems have different obligations regarding the use and monitoring of the high-risk AI system. These include:

- Ensuring human oversight by a person who has the appropriate competence

- Monitoring the operation of the high-risk AI system on the basis of the instructions for use

- Keeping logs for at least six months as a starting point

- Carrying out data protection impact assessments (DPIAs).
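The six-month log retention point lends itself to a simple check. The sketch below assumes that “six months” can be approximated as 183 days and that each log has a known creation date; the constant `MIN_RETENTION_DAYS` and both function names are hypothetical, and other rules may require a longer period.

```python
from datetime import date, timedelta

# Assumption: "at least six months" approximated as 183 days.
MIN_RETENTION_DAYS = 183


def earliest_deletion_date(created: date, retention_days: int = MIN_RETENTION_DAYS) -> date:
    """Return the first date on which a log created on `created` may be deleted."""
    return created + timedelta(days=retention_days)


def may_delete(created: date, today: date) -> bool:
    """True once the minimum retention period has fully passed."""
    return today >= earliest_deletion_date(created)
```

Treating six months as a floor rather than a target keeps the check conservative: a log is only eligible for deletion once the full period has passed.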

What are limited risk AI systems?

AI systems with limited risk need to comply with minimal transparency requirements.

Therefore, you’re obliged to inform people that they are interacting with an AI and to make sure that AI-generated content is labelled as such. This is based on the thought that people have the right to know when they’re interacting with AI or AI-generated content.
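In practice, these transparency requirements could be met with something as simple as a visible notice. The sketch below is one possible approach under stated assumptions: the notice wording in `AI_NOTICE` and the functions `label_ai_content` and `chatbot_greeting` are hypothetical, and the AI Act does not prescribe this exact text.

```python
# Assumption: a plain-text prefix is an acceptable way to label content.
AI_NOTICE = "[AI-generated content]"


def label_ai_content(text: str, notice: str = AI_NOTICE) -> str:
    """Prefix AI-generated text with a notice, without labelling it twice."""
    if text.startswith(notice):
        return text
    return f"{notice} {text}"


def chatbot_greeting(bot_name: str) -> str:
    """Open a conversation by telling the user they are talking to an AI."""
    return f"Hi, I'm {bot_name}. You are chatting with an AI system, not a human."
```

Calling `label_ai_content("Quarterly summary")` returns `"[AI-generated content] Quarterly summary"`, and calling it again on that result leaves the text unchanged.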

What are minimal risk AI systems?

The AI Act allows the free use of AI with minimal (or no) risk. This includes applications such as AI-enabled video games or spam filters. Most AI systems that are currently used in the EU are AI systems with minimal risk.

Now you know about the four risk levels in the AI Act – and the obligations that come with each of them.
